Visual assessment based on intraoperative 2D X-rays remains the predominant aid for intraoperative decision-making, surgical guidance, and error prevention. However, correctly assessing the 3D shape of complex anatomies, such as the spine, from planar fluoroscopic images is challenging even for experienced surgeons.

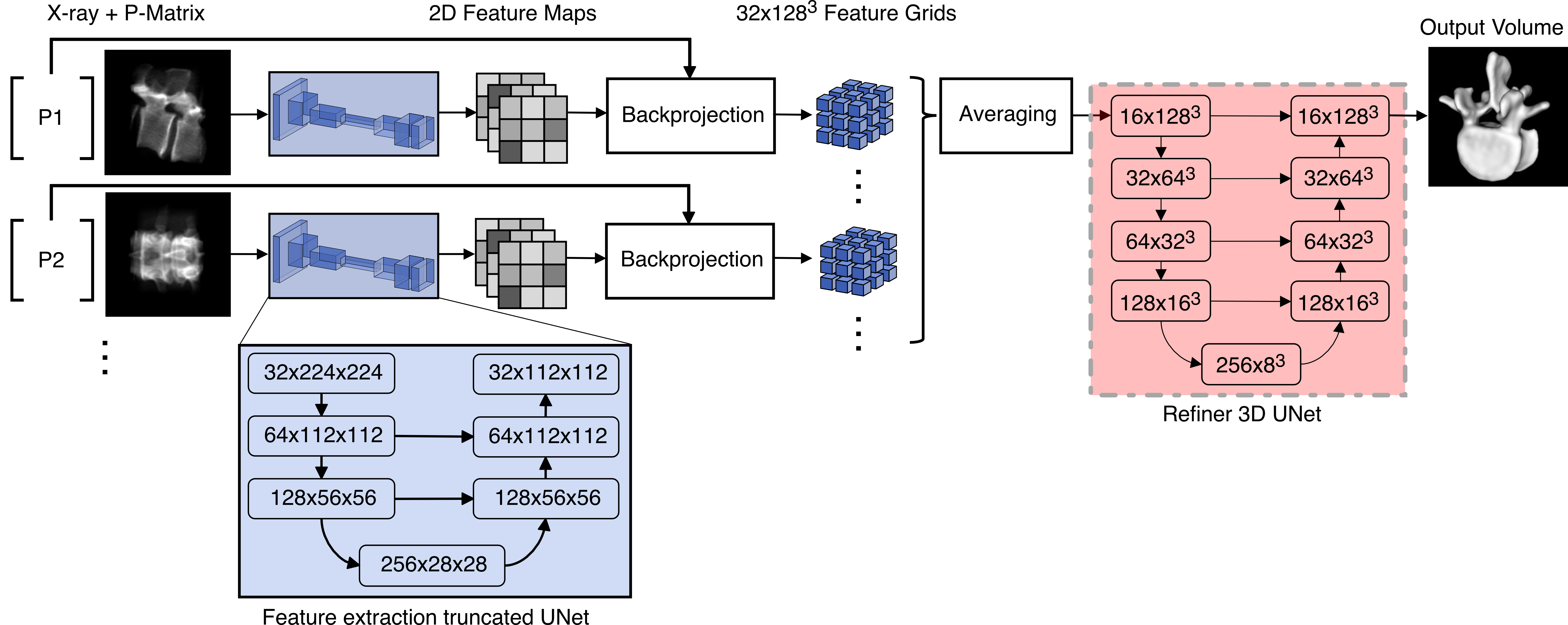

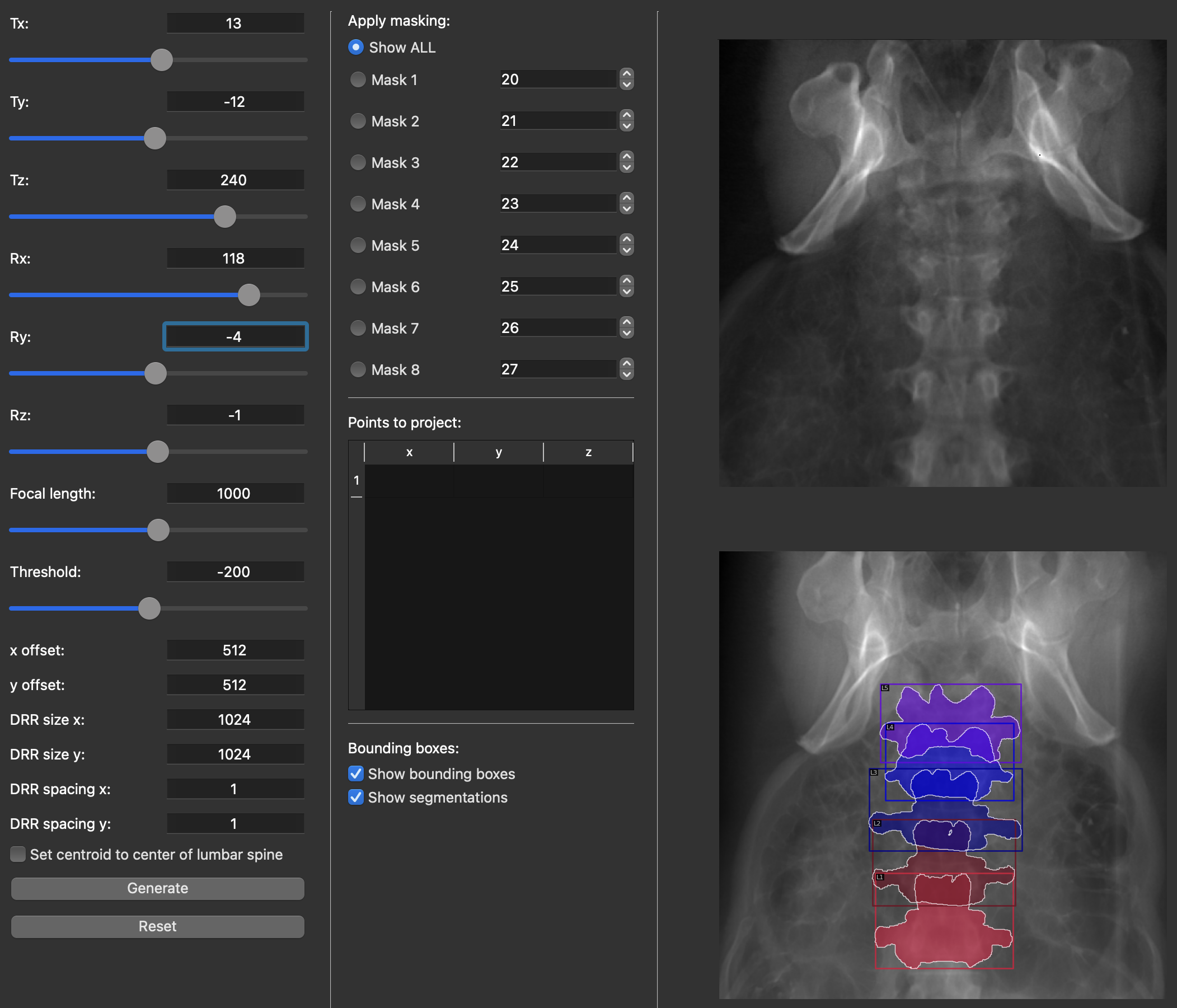

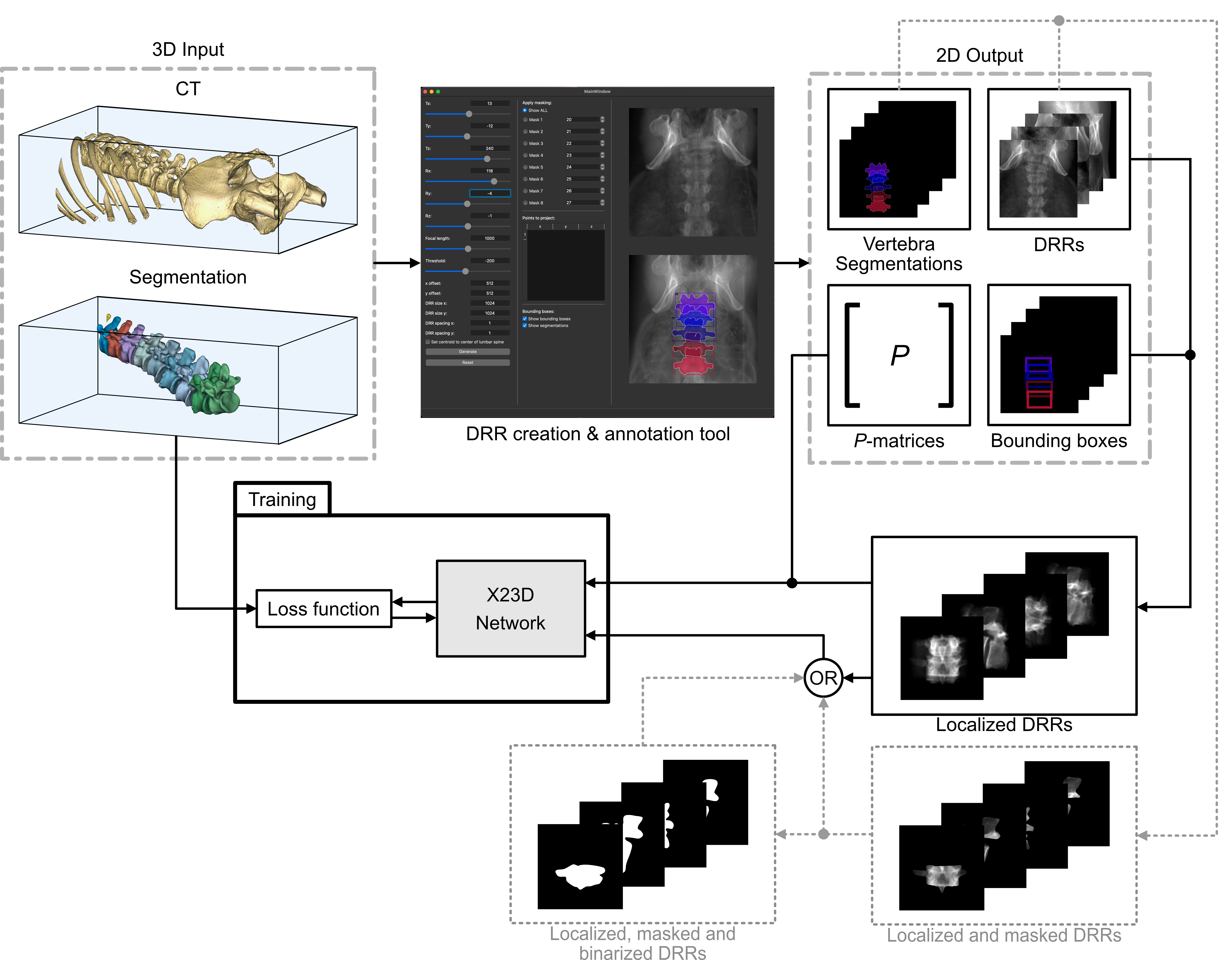

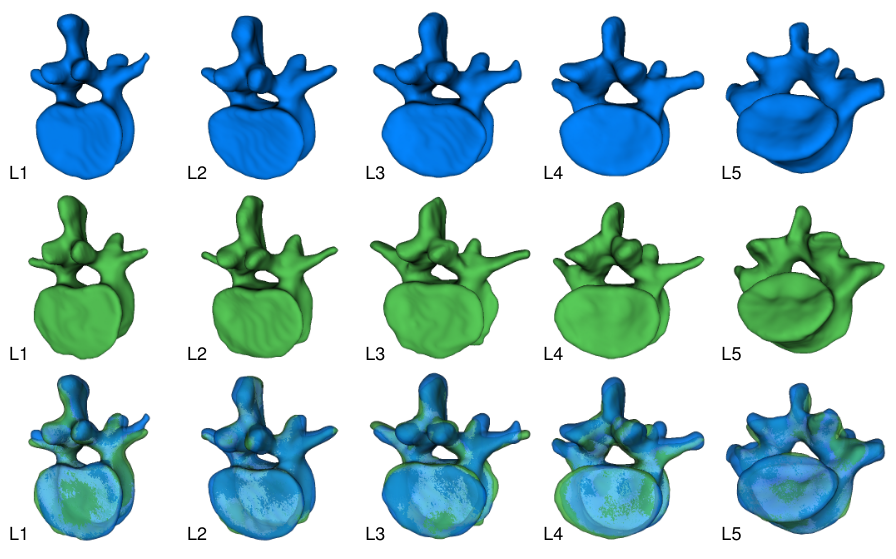

This work proposes a novel deep learning-based method to intraoperatively estimate the 3D shape of patients’ lumbar vertebrae directly from sparse, multi-view X-ray data. High-quality, accurate 3D reconstructions were achieved with a learned multi-view stereo machine approach that incorporates the X-ray calibration parameters into the neural network. This strategy allowed a priori knowledge of the spinal shape to be acquired while preserving patient specificity and achieving higher accuracy than the state of the art.
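To illustrate how calibration parameters can tie 2D image content to a 3D grid, the following is a minimal sketch of feature unprojection, the geometric step commonly used in learned stereo machine style networks. It is not the authors' implementation: the function name `unproject_features`, the single-channel feature map, the nearest-neighbour sampling, and the pinhole projection matrix `P = K [R | t]` are all assumptions made for this example.

```python
import numpy as np

def unproject_features(feature_map, P, grid_points):
    """Sample a 2D feature map at the projections of 3D grid points.

    feature_map: (H, W) array of per-pixel features (one channel for brevity).
    P:           (3, 4) projection matrix, assumed to be K @ [R | t].
    grid_points: (N, 3) array of 3D points in world coordinates.
    Returns an (N,) array; points that project outside the image,
    or that lie behind the source, receive 0.
    """
    H, W = feature_map.shape
    n = len(grid_points)

    # Homogeneous coordinates, then perspective projection.
    homog = np.hstack([grid_points, np.ones((n, 1))])  # (N, 4)
    proj = homog @ P.T                                 # (N, 3)

    depth = proj[:, 2]
    valid = depth > 1e-9
    safe_depth = np.where(valid, depth, 1.0)  # avoid division by zero

    # Nearest-neighbour pixel coordinates (u = column, v = row).
    u = np.rint(proj[:, 0] / safe_depth).astype(int)
    v = np.rint(proj[:, 1] / safe_depth).astype(int)
    valid &= (u >= 0) & (u < W) & (v >= 0) & (v < H)

    out = np.zeros(n, dtype=feature_map.dtype)
    out[valid] = feature_map[v[valid], u[valid]]
    return out
```

Repeating this for each calibrated view yields one 3D feature grid per X-ray, all expressed in the same world frame, which a network can then fuse. A real pipeline would typically use multi-channel features and bilinear interpolation rather than the nearest-neighbour lookup shown here.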

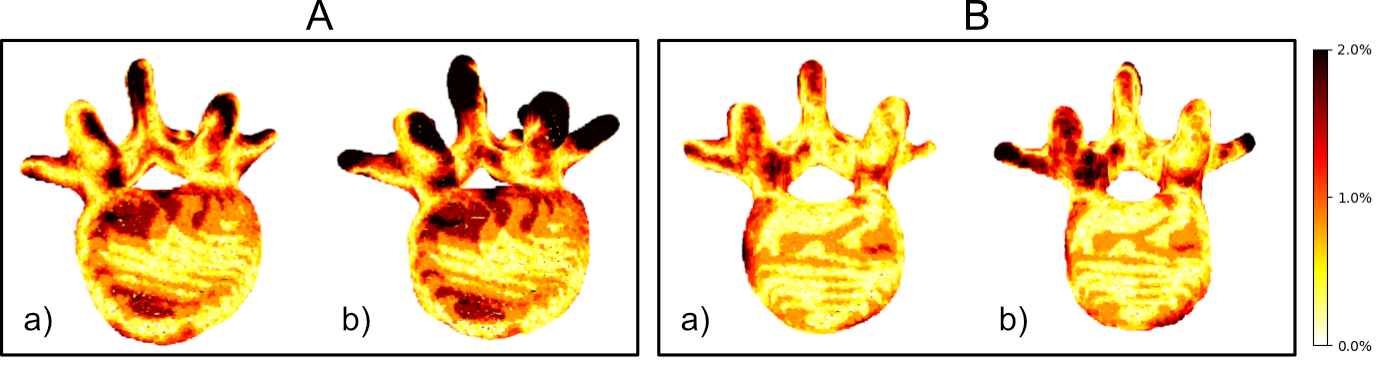

Our method was trained and evaluated on 17,420 fluoroscopy images that were digitally reconstructed from the public CTSpine1K dataset. Evaluated on unseen data, it achieved an average F1 score of 88% and a surface score of 71%. Furthermore, by utilizing the calibration parameters of the input X-rays, our method outperformed a state-of-the-art counterpart method by 22% in terms of surface score.
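As a point of reference for the F1 score reported above, the sketch below computes F1 between a predicted and a ground-truth binary occupancy grid. It assumes the score is taken over voxel occupancy, which this summary does not specify; the function name `voxel_f1` and the convention of returning 1.0 for two empty grids are choices made for this example only.

```python
import numpy as np

def voxel_f1(pred, target):
    """F1 score between two binary occupancy grids of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)

    tp = np.logical_and(pred, target).sum()    # occupied in both
    fp = np.logical_and(pred, ~target).sum()   # predicted but not present
    fn = np.logical_and(~pred, target).sum()   # present but missed

    denom = 2 * tp + fp + fn
    if denom == 0:
        # Both grids are empty; treated here as a perfect match.
        return 1.0
    return float(2 * tp / denom)
```

F1 is the harmonic mean of precision and recall, so it penalizes both spurious and missing voxels. The surface score is a separate metric and is not covered by this sketch.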

This increase in accuracy opens new possibilities for surgical navigation and intraoperative decision-making solely based on intraoperative data, especially in surgical applications where the acquisition of 3D image data is not part of the standard clinical workflow.

Citation

Jecklin S, Jancik C, Farshad M, Fürnstahl P, Esfandiari H.

X23D-Intraoperative 3D lumbar spine shape reconstruction based on sparse multi-view X-ray data.

J Imaging. 2022 Oct 2;8(10):271.